Predicting a Winner

I was given an assignment for my computational statistics course that posed an interesting challenge: "Using only data that is freely available on the internet, could you have predicted the winner of the 2014 FIFA World Cup final between Germany and Argentina?" This post outlines my approach, results, and thoughts on sports prediction generally.

The first interpretation of the question that came to mind, and the one I chose to explore, was "If you had tried to predict the winner the evening before the game, would you have been successful?" My general approach was to gather data that would have been available before the date of the match, choose predictors and build a regression model, then test and refine the model until arriving at a satisfactory balance of accuracy and parsimony[1].

Before gathering data I had to think about how my model would be structured, and to do that I needed to think about the response (or dependent variable) and how it would be represented. Three possibilities:

- I could represent win/loss per game as a binary variable for logistic regression: a 1 indicates the home team winning, and a 0 indicates the home team losing. The main problem with this method is that it doesn't account for tie games.

- I could represent home win/away win/tie as three different categories, and use multinomial regression or ordinal regression[2]

- I could count the number of goals per game (which probably follows a Poisson distribution) and do a Poisson regression on this. We can derive the game win/tie/loss by comparing the two scores. However, it's slightly more complex and a little indirect to build a model that predicts scores when our real goal is win/loss.
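To make the third option concrete, here is a rough sketch of how win/tie/loss probabilities could be derived from two Poisson scoring rates. It is not something I built for the assignment: the function names and the two rates are made up for illustration, and it assumes the two teams' scores are independent.

```python
from math import exp, factorial


def poisson_pmf(k: int, rate: float) -> float:
    """Probability of exactly k goals when goals arrive at the given mean rate."""
    return exp(-rate) * rate ** k / factorial(k)


def outcome_probabilities(home_rate: float, away_rate: float, max_goals: int = 10):
    """Return (home win, tie, away win) probabilities.

    Assumes the two scores are independent Poisson counts. Scorelines with
    more than max_goals for either team are ignored, which loses only a
    negligible amount of probability for typical football scoring rates.
    """
    home_win = tie = away_win = 0.0
    for home_goals in range(max_goals + 1):
        for away_goals in range(max_goals + 1):
            p = poisson_pmf(home_goals, home_rate) * poisson_pmf(away_goals, away_rate)
            if home_goals > away_goals:
                home_win += p
            elif home_goals == away_goals:
                tie += p
            else:
                away_win += p
    return home_win, tie, away_win


# Hypothetical scoring rates, chosen only for illustration.
home_win, tie, away_win = outcome_probabilities(1.5, 1.1)
print(f"home win {home_win:.3f}, tie {tie:.3f}, away win {away_win:.3f}")
```

Unlike the binary response, this approach gives ties a probability of their own instead of dropping them.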

I chose to go with a binary variable, mostly because the other two methods hadn't yet been covered in my statistics course. I would use a '1' to represent a home team win and a '0' to represent an away team win, and I would drop tie games from my data-set as the model didn't have a way to account for them.

I also needed to make sure my model was symmetric; by this I mean that it shouldn't matter which team was labelled home or away. I did this by representing each predictor as ('home team value' - 'away team value'). For a predictor such as team ranking, the value is positive if the home team has the higher ranking and negative if the away team has the higher ranking, and hence symmetrical around 0.
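A small sketch of the encoding described above, in plain Python. The team names, statistic names and numbers are placeholders rather than my real data, and the helper functions are invented for this illustration.

```python
# Hypothetical team statistics; the numbers are placeholders, not real data.
team_stats = {
    "Team A": {"ranking_points": 1200.0, "nominees": 2},
    "Team B": {"ranking_points": 1100.0, "nominees": 3},
}


def difference_features(home, away, stats):
    """Return each predictor as (home team value - away team value)."""
    return {name: stats[home][name] - stats[away][name] for name in stats[home]}


def encode_result(home_goals, away_goals):
    """Return 1 for a home win, 0 for an away win, and None for a tie."""
    if home_goals == away_goals:
        return None  # tie games are dropped from the data-set
    return 1 if home_goals > away_goals else 0


forward = difference_features("Team A", "Team B", team_stats)
reverse = difference_features("Team B", "Team A", team_stats)

# Swapping home and away flips the sign of every predictor.
assert all(forward[name] == -reverse[name] for name in forward)
```

Because swapping the two teams only flips signs, the model sees the same game either way round.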

Choosing predictors

I came up with 10 predictors to test for a relationship with win/loss prediction:

- Home team advantage: It has been shown (and makes sense intuitively) that a team will perform better when playing in their home stadium. This is a categorical variable which is either "HOME" (Home team has home team advantage), "NONE" (neither has home team advantage) or "AWAY" (away team has home team advantage).

- Distance team has travelled from their home capital: This is of similar relevancy to home/away but applies across even teams that are both playing ‘away’. The idea being that the less a team has to travel for a game, the more rested they will be, the more fans will be there to cheer them on, and the more familiar they will be with the conditions.

- Recent possession: Possession is calculated by taking the average possession percentage of the home team in the games played during FIFA 2014.

- FIFA ELO ranking: This takes into account the recent performance of the team and is a proxy for ‘how well has this team been performing’ in the last 4 years (recently). These stats were published by FIFA the month before the World Cup took place.

- Change in teams ranking over past 12 months: That is, a team ‘on the up’ may be seen as more likely to win. The rankings in the previous predictor were compared to the rankings for the same teams in June 2013.

- Average player age: Age may be relevant as a proxy for player experience, and was calculated by averaging the ages of the players in each team.

- Average player height: May represent the ‘vitality’ of players in a similar way to height in basketball (although probably less relevant if you’re not jumping for a hoop).

- Number of players nominated for a Ballon d’Or: The Ballon d’Or is an annual trophy given by FIFA to the ‘world’s best male player.’ There are 20 nominees each year and the number of players nominated for this award acts as a good proxy for how many ‘star players’ a team has.

- Average goals scored recently: The average number of goals scored across the first 63 matches of the 2014 World Cup is a good measure for recent team performance.

- Average concessions recently: Similar to average goals, this also is a measure of recent team performance.
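As an illustration of the distance predictor in the list above, one way to approximate the distance from a team's capital to the stadium is the haversine (great-circle) formula. This is a sketch of one reasonable method and not necessarily how my own numbers were produced; the coordinates are approximate and the function name is made up.

```python
from math import asin, cos, radians, sin, sqrt

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = radians(lat1), radians(lat2)
    delta_phi = radians(lat2 - lat1)
    delta_lambda = radians(lon2 - lon1)
    a = sin(delta_phi / 2) ** 2 + cos(phi1) * cos(phi2) * sin(delta_lambda / 2) ** 2
    # Clamp to 1.0 to guard against floating-point overshoot before asin.
    return 2 * EARTH_RADIUS_KM * asin(sqrt(min(1.0, a)))


# Approximate coordinates, for illustration only.
BERLIN = (52.52, 13.405)
BUENOS_AIRES = (-34.6037, -58.3816)
RIO_DE_JANEIRO = (-22.9068, -43.1729)  # city hosting the final

germany_km = haversine_km(*BERLIN, *RIO_DE_JANEIRO)
argentina_km = haversine_km(*BUENOS_AIRES, *RIO_DE_JANEIRO)

# The predictor is the difference between the two teams' travel distances.
print(f"Germany: {germany_km:.0f} km, Argentina: {argentina_km:.0f} km")
```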

Then I gathered my data from various sources and transformed it into a frame of team statistics:

I then transformed this into a final dataframe including the result of each of the 63 games played up to the final game in 2014, along with our constructed predictors. It went fairly smoothly, with only a few issues joining data with different spellings, such as Colombia/Columbia. Code for this is in the pdf linked below.

Model analysis and Eliminating Predictors

Before fitting any model, I did a pairwise plot of all the predictors to test for multicollinearity. That's a big word that just means a relationship between independent variables/predictors. This is bad because it makes a model very sensitive to minor changes and can essentially undermine/invalidate the statistical tests used for significance.

I could see a relationship between avg goals, avg concessions, and FIFA_ranking. This is probably because all three were based on the same underlying information - how many goals a team had scored in recent history. I dropped goals and concessions and kept the FIFA ranking.
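I did this check by eye from the pairwise plot, but the same idea can be sketched numerically by computing the correlation between every pair of predictors and flagging the strong ones. The column names, the values and the 0.8 cut-off below are all assumptions made for the example.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return covariance / sqrt(var_x * var_y)


def correlated_pairs(columns, threshold=0.8):
    """List predictor pairs whose absolute correlation reaches the threshold."""
    names = list(columns)
    flagged = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            r = pearson(columns[first], columns[second])
            if abs(r) >= threshold:
                flagged.append((first, second, round(r, 3)))
    return flagged


# Placeholder values, not the real data-set.
columns = {
    "avg_goals": [1.0, 2.0, 3.0, 4.0],
    "ranking": [10.0, 21.0, 29.0, 41.0],
    "avg_age": [27.0, 25.0, 28.0, 26.0],
}

flagged = correlated_pairs(columns)
print(flagged)
```

Any pair that gets flagged is a candidate for dropping one of the two predictors, which is what I did with goals and concessions.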

I was now down to 8 predictors, and the next step was to test which ones had the strongest relationship with what we are trying to predict. I did this in two ways, the first being to draw scatterplots of the response against each predictor to see visually whether there looked to be a relationship.

I also fit the model and looked at statistical test results to see which were thought to be significant.

The important part of that ream of numbers is the column of p-values on the right-hand side, along with the stars and dots that indicate significance. From this it seemed that only three predictors were significant at the 5% level: distance travelled, FIFA rank, and award-nominated players. I re-plotted these three predictors:

And fit a new model:

FIFA rank didn't seem significant any more, but I kept it in the model. The model without it had only distance travelled and award-nominated players. Intuitively, though, the model that keeps FIFA rank could be the better one. Imagine two teams from nearby countries, each with no players nominated for the award, and yet with very different FIFA ranks. The rank should be included as it tells us something valuable about the skill of a team that the other two don't entirely capture.
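For readers who want to see what fitting a model like this involves, here is a bare-bones sketch of logistic regression fitted by gradient ascent, using only the standard library. It is not the code from my analysis: the data is a made-up toy set of two difference predictors, the learning rate and iteration count are arbitrary, and unlike a statistics package it does not produce p-values.

```python
from math import exp, log


def sigmoid(z):
    """Map log odds to a probability."""
    return 1.0 / (1.0 + exp(-z))


def predict_probability(weights, row):
    """Probability of a home win; weights[0] is the intercept."""
    return sigmoid(weights[0] + sum(w * x for w, x in zip(weights[1:], row)))


def log_likelihood(weights, rows, labels):
    """Log-likelihood of the labels under the fitted model."""
    total = 0.0
    for row, label in zip(rows, labels):
        p = predict_probability(weights, row)
        total += label * log(p) + (1 - label) * log(1.0 - p)
    return total


def fit_logistic(rows, labels, learning_rate=0.1, iterations=2000):
    """Fit the weights by plain gradient ascent on the average log-likelihood."""
    weights = [0.0] * (len(rows[0]) + 1)
    for _ in range(iterations):
        gradient = [0.0] * len(weights)
        for row, label in zip(rows, labels):
            inputs = [1.0] + list(row)
            error = label - predict_probability(weights, row)
            for j, x in enumerate(inputs):
                gradient[j] += error * x
        weights = [w + learning_rate * g / len(rows) for w, g in zip(weights, gradient)]
    return weights


# Toy data: each row is (home - away) for two hypothetical, rescaled predictors.
rows = [(1.0, 0.5), (0.8, -0.2), (0.3, 0.4), (-0.2, 0.1),
        (-0.5, -0.6), (-1.0, 0.2), (0.4, -0.3), (-0.7, -0.1)]
labels = [1, 1, 1, 0, 0, 0, 0, 1]  # 1 = home win, 0 = away win

weights = fit_logistic(rows, labels)
print("intercept and coefficients:", [round(w, 3) for w in weights])
```

A positive coefficient means that a larger (home - away) difference in that predictor pushes the prediction towards a home win.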

So how do we interpret this model?

It says that three main factors give the best estimate (found by us) of which team will win a game of soccer. They boil down to:

- The general skill level of a team, represented in the FIFA ranking

- The number of 'star players' in a team, separate to the average skill of a team. Important because there is a subset of players that are considered a level above most in terms of ability, and those players can heavily swing a game on their own.

- The match specific distance travelled by team to stadium. This represents environmental factors; as mentioned above, a team that is playing at home has more fans to boost morale, is more rested, and more familiar with the terrain. This is a big enough factor to matter.

Results

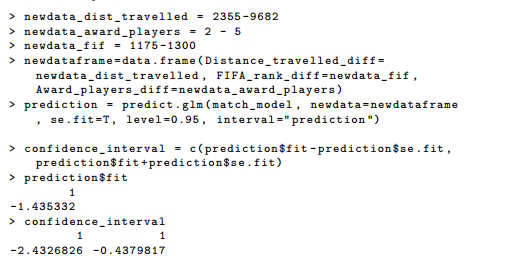

After constructing this model, our goal was to predict the result of the final game. I plugged in the values for Argentina vs Germany:

The model indicates with 95% confidence that the log odds will be below 0 - this is equivalent to predicting that the away team wins in our model. Thus the model successfully predicts the winner of the 2014 FIFA World Cup would be the away team, Germany.
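To spell out the log-odds reasoning: log odds of 0 correspond to a 50% chance of a home win, so an interval that sits entirely below 0 means the home team is given less than an even chance. The sketch below shows the conversion; the interval bounds are hypothetical placeholders, not the values my model produced.

```python
from math import exp


def log_odds_to_probability(log_odds):
    """Convert log odds of a home win into a probability of a home win."""
    return 1.0 / (1.0 + exp(-log_odds))


def predicted_winner(lower_log_odds, upper_log_odds):
    """Pick a winner only when the whole interval lies on one side of 0."""
    if upper_log_odds < 0:
        return "away"
    if lower_log_odds > 0:
        return "home"
    return "undecided"


# Hypothetical 95% interval on the log-odds scale, for illustration only.
lower, upper = -2.0, -0.3

probability_range = (log_odds_to_probability(lower), log_odds_to_probability(upper))
print(predicted_winner(lower, upper), probability_range)
```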

Final thoughts

There were a few limitations to the model, the most glaring being that it couldn't handle tie games, and that I only had 54 data points after cutting out the last game and tie games. They could be fixed fairly easily though, by spending more time collecting data over a longer period of time, and by changing the response to a categorical or Poisson response. One interesting extension that I'd like to make before the next FIFA World Cup is to use bookie odds and invert them to calculate an expected payout. That way instead of optimising the model over which team is most likely to win, it could be trained on which has the best expected payoff - maybe encouraging us to bet on underdogs if we disagree with the bookies. I look forward to comparing the performance of this model with the actual results next cup.
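As a rough idea of what the bookie-odds extension might look like, the sketch below inverts decimal odds into an implied probability and computes the expected profit of a bet given the model's own probability. The decimal-odds format, the function names and the numbers are assumptions for illustration, and the bookmaker's margin is ignored.

```python
def implied_probability(decimal_odds):
    """Probability implied by decimal odds, ignoring the bookmaker's margin."""
    return 1.0 / decimal_odds


def expected_profit(model_probability, decimal_odds, stake=1.0):
    """Expected profit of one bet.

    A winning bet returns stake * decimal_odds (profit of stake * (odds - 1));
    a losing bet loses the stake.
    """
    win_profit = stake * (decimal_odds - 1.0)
    return model_probability * win_profit - (1.0 - model_probability) * stake


# Hypothetical underdog: the bookie offers 3.0, our model says a 40% chance.
odds = 3.0
model_probability = 0.4

print("bookie implied probability:", round(implied_probability(odds), 3))
print("expected profit per unit staked:", round(expected_profit(model_probability, odds), 3))
```

A positive expected profit is exactly the situation where we disagree with the bookies in the underdog's favour.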

As for the questions I set out to answer at the beginning of this:

Could I have predicted the winner?

Surprisingly, yes, the model actually worked and I would've made the same choices and used the same data had I been doing it the night before the game.

Is this sustainable?

My gut initially said no, it's impossible to beat the bookies. However, this doesn't seem to be true. In practice there are professional gamblers, and even firms of mathematicians, hired specifically to do this sort of thing and make bets on the outcomes of games[3]. In baseball it's called sabermetrics, which is also commonly used by baseball teams that are recruiting and want more confidence in selecting their players.

It isn't likely for the average individual to make money in the long run betting on things like sports, but with effort invested, extra information found and utilised, and employment of mathematical techniques, you can indeed get enough of an edge to be profitable.

More resources if you're interested in reading more technical details:

If you have questions about how I did this, or problems that might be solved by this type of analysis, my email is floating around the website - don't hesitate to get in touch. (Disclaimer: I have no intention to leave my current job at Amazon - contact me with interesting projects, not job offers)

Notes

[1] Parsimony relates to the number of predictors - a model with two independent variables is easier to understand and interpret than one with ten. Including too many predictors in the model can also result in over-fitting or interaction between terms.

[2] Multinomial regression is a type of classification that allocates each data-point to one of n categories. Predicting things like nationality, type of drink, etc are best done using multinomial regression. Ordinal regression is an extension of multinomial regression in which the categories are ordered - this adds extra information that can be used to improve the model. An example of ordered categories might be win/tie/loss in the case of our model, tax brackets, or the Likert-type scale[4].

[3] Generally in a real life setting this is done with a combination of both a statistical model as well as human judgement. Professional 'Odds Setters' are hired to take the output of the statistical models, and then adjust it to account for things such as a last minute injury to a star player.

[4] If you don't recognise the name 'Likert-type Scale', you've seen it many times before; the five categories in the Likert-type scale are 'Strongly Agree' through to 'Strongly Disagree' and they appear on just about every survey in the history of ever.